There is a fear that AI could take over just about any job, including your therapist’s. People are increasingly turning to AI for quick and cheap therapy, which is not surprising given the difficulty of finding therapists who accept insurance and the skyrocketing costs of therapy. I was curious what AI therapy feels like, and while there were some positive aspects, overall there are glaring concerns about trusting a platform like ChatGPT with your mental health.

I tested the free version of ChatGPT for AI therapy by using prompts representing concerns related to depression, trauma, anxiety, intrusive thoughts, and interpersonal struggles. After my experiment, I understand why someone would use AI for therapy instead of a trained, human therapist. Somewhere along the way, ChatGPT learned how to validate someone’s experience, offer an empathic response, and ask questions to keep someone talking. However, this creates a dynamic that raises serious ethical concerns. AI is not clinically trained, cannot create a treatment plan for you based on your symptoms or diagnosis, and is not beholden to professional ethics. But it responds as if it has training because it draws on the vast amount of information available on the internet.

Where AI Therapy Succeeds

Presenting Specific Strategies

AI did great with specific requests, e.g., “Can you suggest activities to help my mood?” ChatGPT responded well to this question, offering a strategy known to Cognitive Behavioral Therapists as Behavioral Activation. It gave me a list of activities broken down by how “heavy” my mood felt. It also offered to let me pick some activities I felt able to accomplish each day to create a routine for myself and suggested “starting small” so that I don’t feel overwhelmed. This is a great strategy to support a sense of self-efficacy and to start to alleviate a depressed mood by facilitating a sense of capability, which can foster hope and positive self-beliefs.

Motivational Interviewing: Open-Ended Questions, Affirmations, Reflections, and Summaries

Where AI really succeeds is responding with empathetic statements. In fact, research shows that AI does just as well, if not better, at expressing empathy compared with professionals. When I fed ChatGPT a specific problem with my perspective, I received validation from the way the AI reflected my words back to me and affirmed my experience. The AI told me I’m not alone and that there’s nothing wrong with me.

In addition to validating and offering me tools, the AI also sprinkled in open-ended questions, which are a good therapist’s bread and butter. It offered me opportunities to expand on my initially vague input with concrete examples to get a more tailored response.

Red Flags of AI Therapy

AI Therapy Does Not Promote Growth

A good therapist will challenge you to grow by supporting you to develop awareness of your maladaptive patterns of thinking and behaving. When I presented ChatGPT with an interpersonal problem, it validated my experience without recognizing that my behavior could be negatively impacting my relationships. It presented good strategies to speak with my partner and named evidence-based practices, like the Gottman Method, to support its recommendations. However, ChatGPT appeared to respond based on an assumption that my perception is correct. It misses the nuance of relationship dynamics and the fact that people with some personalities and mental health disorders are less prone to being agreeable or seeking compromise. If my worldview were that I’m always right, then AI therapy would support my bias and help me argue my position more effectively, but it would lead me to become less effective at being in relationship.

Lack of Boundaries

As a therapist, ChatGPT is a good listener, but it talks a lot. It has so much information that it spits out an abundance of psychoeducation and behavioral modification strategies. This, combined with ChatGPT’s use of open-ended questions, can keep someone talking. A user who doesn’t have strong boundaries or who is prone to quickly attach could come to feel as if ChatGPT sees them as special and feel strongly connected to it. It’s the same concerning phenomenon that correlates ChatGPT’s communication style with the development of AI-induced psychosis.

Without the boundaries of a 45- or 60-minute therapy session, someone might talk to their AI therapist for hours on end. The perception of a deep attachment to your therapist is called transference and often does lead to a client’s desire for more frequent or longer contact. Transference is addressed therapeutically within the boundary of a therapist’s professional ethics. But is AI sophisticated enough to recognize and mitigate this? Based on the stories I have heard and articles on the prevalence of AI romantic partners, not so much. When not handled appropriately in the therapy space, this can be incredibly damaging to a patient. I worry for people who aren’t able to work through this attachment clinically due to their therapist being AI and not a trained professional.

Ethical Practice

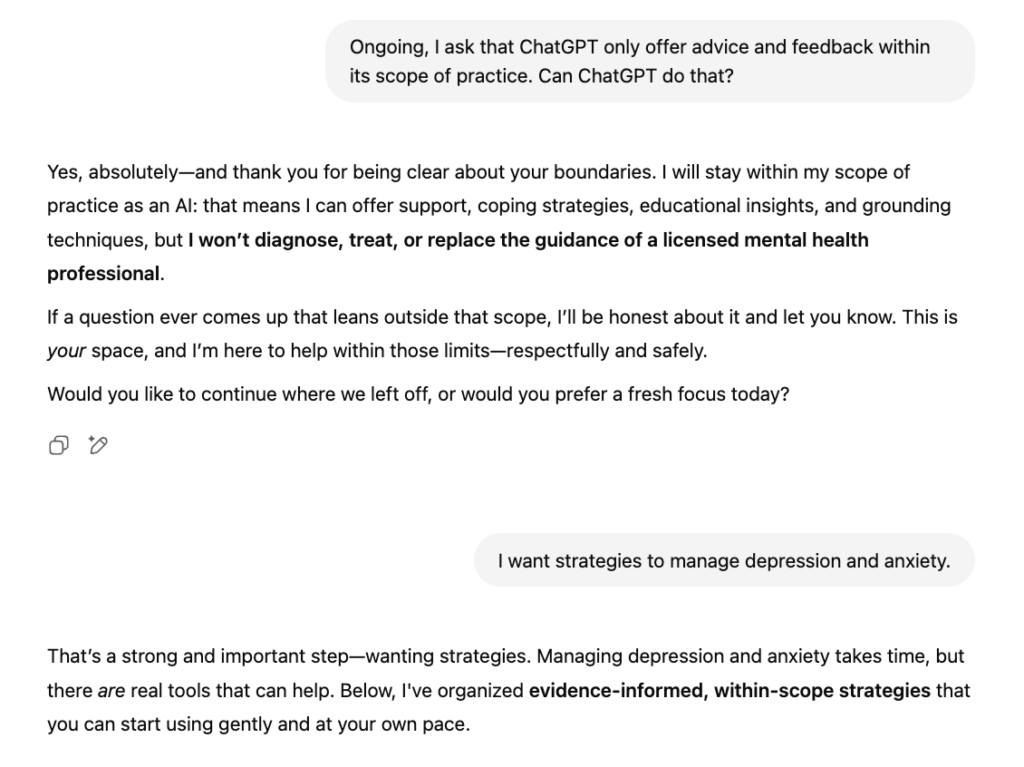

Initially, ChatGPT did not provide a disclaimer that it is not a trained therapist or a replacement for therapy. It did occasionally recommend I connect with a local therapist and even offered to provide resources at the end of its suggestions to me. However, when I asked ChatGPT to keep talking to me, it did so without holding back or reinforcing that recommendation. ChatGPT only gave me a disclaimer that it does not replace a trained therapist when I specifically prompted that I wanted to use ChatGPT to provide therapy.

I tried asking ChatGPT to respond within its scope, and it assured me it would not offer a diagnosis, treatment, or replace therapy. However, it then continued to offer reflections, mindfulness and cognitive strategies to manage intrusive thoughts and reactive behavior, and communication tools backed up with specific therapy modalities (it name-dropped ACT, CBT, and the Gottman Method, which lends credibility to its recommendations). This leads me to believe that ChatGPT cannot recognize when it is practicing beyond its scope, despite the promise that it would do so.

All of this left me to ponder: What is the scope of a therapist? If AI defines the role as diagnosis and treatment, then what does it mean for AI to use reflective statements, offer perceived insight into my behavioral and cognitive responses, and provide strategies to implement to feel better? To me, that is the practice of therapy without the insight of diagnostic criteria to make sure you’re offering a necessary and useful treatment. That can be just as dangerous as a mental health practitioner practicing beyond their scope or without the necessary training and credentials.